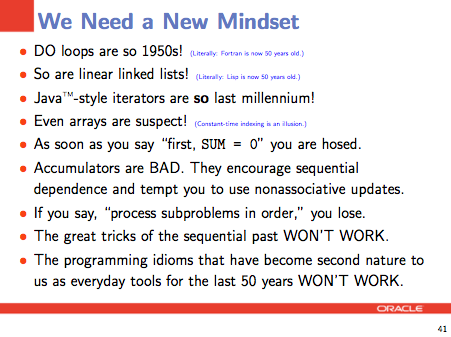

At Strange Loop 2010, Guy Steele gave a wide-ranging, excellent talk in which the key point was:

In essence, his notion is to use a divide-and-conquer approach, which he described as “map-reduce in the small” (or some similar phrase). This is analogous to techniques used to partition work in large distributed systems, but inside a single program.

I heartily agree with all of this. Massive multicore will be a dominant factor in software design in the coming decade. In 2010, most of us are happily waiting in a calm before a storm, because our multicore machines don’t have very many cores yet. For most applications, we get by with very coarse parallelism (such as one thread per concurrent user request being served, in an application server or web server). This won’t last – when cheap PCs have 50+ cores, most software will need to harness parallelism in a much more fine-grained way. Allocating only one core per concurrent operation will become ridiculous.

You can download Steele’s slides from the Strange Loop site, or watch this video of his previous talk at ICFP 2009 in which he covered some of the same material. At Strange Loop, Steele showed how to solve a particular problem (counting words in a string) in a manner amenable to parallel processing. His sample code was written in Fortress, a Sun-Oracle research language. Fortress didn’t bother me, but I heard some discussions about the language choice (and rapid presentation) as an obstacle to detailed understanding.

I propose to elaborate these ideas by walking through sample code, in a series of talks.

Talk Proposal: Map-Reduce in the Small

First, I will briefly summarize the need for fine-grained parallelism.

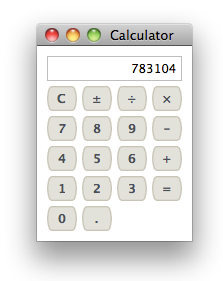

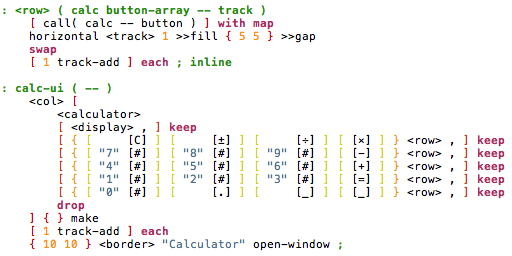

Then, I will present three code walkthrough examples in a widely used language.

- The word-count problem from Guy Steele’s Stange Loop talk.

- Another simple algorithmic / computer-science-flavored example.

- As time allows, a third example from a thoroughly practical, enterprise-app-flavored problem space.

This talk will use very few slides; instead it will be all about the code. Each example will show how (not just why) to parallelize algorithms.

An Array[0..N] of Talks

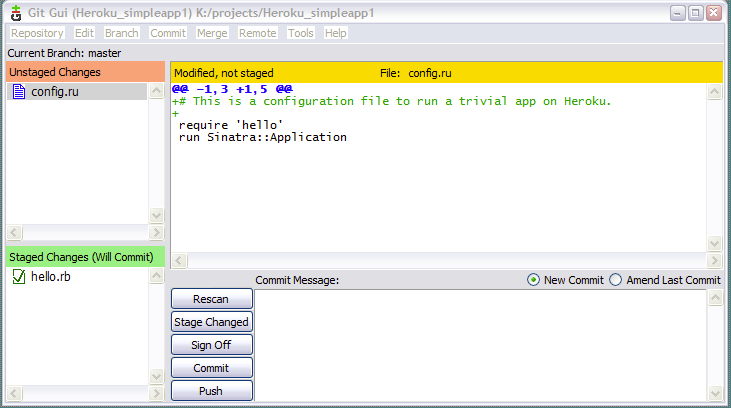

This idea is worthy of deep understanding and practice, so I have in mind several talks, each using a different language, and with sufficient differences to be interesting even for someone who happens to be present for all of them:

- Examples in Java, at the St. Louis Java user group

- Examples in Clojure, at the St. Louis Clojure Lunch Cljub (there are already some good Clojure examples out there, making this one easy, but still worthwhile).

- Examples in another language (perhaps more esoteric, to be determined later), at Lambda Lounge

- A repeat of one of those languages, with updated examples, at Strange Loop 2011 (no web site yet!)

Putting these together will take a while, so I have in mind spreading these over the next year or so. Of course, it is quite possible that only a subset of these groups/events, possibly an empty set, will accept this talk offer. In that case, I’ll take it as a sign that the St. Louis community already understands micro-parallelism in depth, and celebrate!

Update: Schedule

As these talks are scheduled, I will put the information here:

- Java code at the St. Louis JUG: Jan 13, 2011.