Last year, I wrote about my efforts to find a storage server with lots of storage at a low cost-per-byte. What was obvious to me at the time, but apparently not obvious to many vendors, is that the key to cost effective storage is to buy mostly hard drives and as little else as possible. I built on Linux and commodity hardware, but the principle applies regardless of OS or hardware vendor.

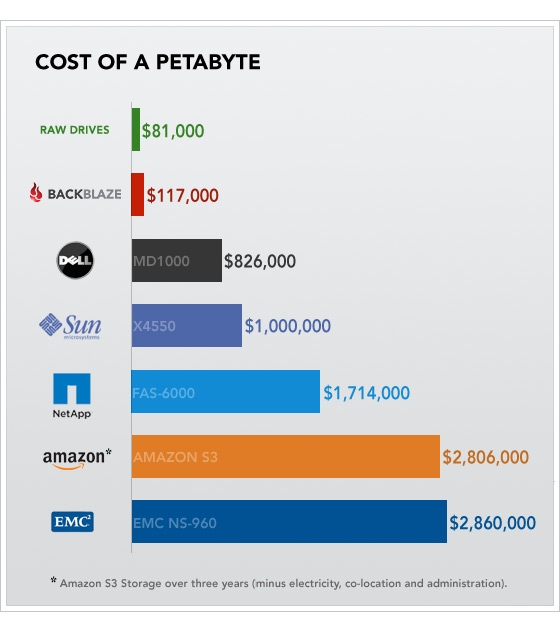

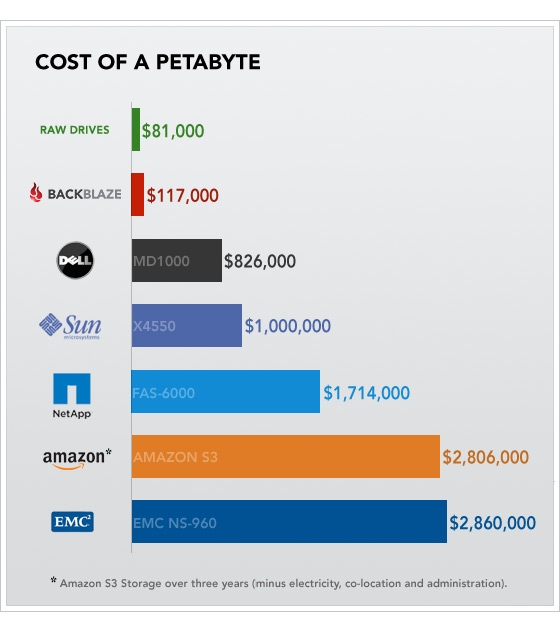

The team at BackBlaze went much farther down the same path. They ended up with a custom made 4U case (a bit expensive) while the rest of the parts are few in number, inexpensive, and off the shelf. Their cost overhead is stunningly low, as seen in this chart (which I copied from their article):

Is this right for everyone? Of course not. Enterprise buyers, for example, may need the extra functionality offered by the enterprise class solutions (at many times the cost). Cloud providers and web-scale data storage users, though, simply cannot beat BackBlaze’s approach. What about performance? Clearly this low-overhead approach is optimized for size and cost, not performance. Yet the effective performance can be very high, because this approach makes it possible to use a very large number of disk spindles, and thus has a very high aggregate IO capacity.

Predictably, the response to BackBlaze’s design has been notably mixed, with numerous complaint about performance and reliability. For a very thoughtful (though unavoidably biased) response, read this Sun engineer’s thoughts.

The key thing to keep in mind is the problem being solved. BackBlaze’s design is ideal for use as backup, bulk storage. That is a very common need; the solution I set up (described at the link above) had a typical use case of a given file being written once, then never read again, i.e. kept “just in case”. Reliability, likewise, is obtained as the system level, by having multiple independent servers, preferably spread across multiple physical sites. Once you’re paying the complexity cost to achieve this, there isn’t much additional benefit to paying the cost a second time in the form of more expensive storage.