Life is Risk

Whenever you hire out work, whether to a person, a team, or a company, there are risks. These risks can easily prevent the work from being completed, and even more easily prevent it from being completed on time. (I’m thinking mostly of software development work as I write this, but most of this applies to other domains as well.)

What could go wrong with the person/team/company you hire?

- They get distracted by family or personal issues.

- They turn out to not be as qualified or capable as they appeared.

- They leave for better work. Sure, you might have a contract requiring them to finish, but your lawsuit won’t get the work done on time.

- They turn out to not be as interested in your work as they first appeared.

- They start with an approach which, while initially appearing wise, turns out to be poorly suited.

- They fall ill or are injured.

Of course you should carefully interview and check reputations to avert some of these risks, but you cannot make them all go away. You don’t always truly know who is good, who will produce. You can only estimate, with varying levels of accuracy. The future is unavoidably unknown and uncertain.

But you still want the work done, sufficiently well and sufficiently soon. Or at least I do.

Redundancy Reduces Risk

A few years ago I stumbled across a way to attack many of these risks with the same, simple approach: hire N people or teams in parallel to separately attack the same work. I sometimes call this a RAIT, a Redundant Array of Independent Teams. Both the team size (one person or many), and the number of teams (N) can vary. Think of the normal practice of hiring a single person or single team as a degenerate case of RAIT with N=1.

To make RAIT practical, you need a hiring and management approach that uses your time (as the hirer) very efficiently. The key to efficiency here is to avoid doing things N times (once per team); rather, do them once, and broadcast to all N teams. For example, minimize cases where you answer developer questions in a one-off way. If you get asked a question by phone, IM, or email, answer it by adding information to a document or wiki; publish the document or wiki to all N teams. If you don’t have a publishing system or wiki technology in hand, in many cases simply using a shared Google Document is sufficient.

There are plenty of variations on the RAIT theme. For example, you might keep the teams completely isolated in terms of their interim work; this would minimize the risk that one team’s bad ideas will contaminate the others. Or you might pass their work back and forth from time to time, since this would reduce duplicated effort (and thus cost) and speed up completion.

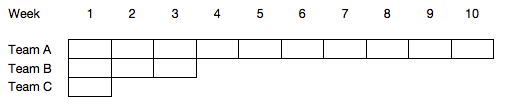

Another variation is to start with N teams, then incrementally trim back to a single team. For example, consider a project that will take 10 weeks to complete. You could start with three concurrent efforts. After one week, drop one of the efforts: whichever has made the least progress. After three weeks, again drop whichever team has made the least progress, leaving a single team to work all 10 weeks. As the illustration shows, the total cost of this approach is 14 team-weeks of work (1 + 3 + 10).

How might you think about that 14 team-weeks of effort/cost?

- It is a 40% increase in cost over picking the right team the first time. If you can see the future, you don’t need RAIT.

- It is a 30% decrease compared to paying one team for 10 weeks, realizing they won’t produce, then paying another team for 10 more weeks (14 team-weeks instead of 20).

- If you hired only one team, which doesn’t deliver on time, you might miss a market opportunity.
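
The arithmetic behind these comparisons is easy to check. Here is a minimal sketch in Python; the function name `rait_cost` and its parameters are my own illustrative choices, and it assumes every team costs the same per week and that dropped teams are paid only up to the week they are dropped.

```python
def rait_cost(drop_weeks, project_weeks):
    """Total team-weeks for a trimmed RAIT.

    drop_weeks: the week at which each dropped team stops work.
    project_weeks: how long the one surviving team works.
    Assumes equal weekly cost per team (an illustrative simplification).
    """
    return sum(drop_weeks) + project_weeks


# The example from the text: three teams, one dropped after week 1,
# one dropped after week 3, one working the full 10 weeks.
trimmed = rait_cost([1, 3], 10)

# Baselines to compare against.
lucky_single_team = 10       # you picked the right team the first time
fail_then_retry = 10 + 10    # first team fails, second team starts over

extra_vs_lucky = (trimmed - lucky_single_team) / lucky_single_team
saved_vs_retry = (fail_then_retry - trimmed) / fail_then_retry

print(trimmed)                   # 14
print(f"{extra_vs_lucky:.0%}")   # 40%
print(f"{saved_vs_retry:.0%}")   # 30%
```

Changing the drop weeks shows how quickly early trimming pays off: the sooner you can tell which teams are lagging, the less the redundancy costs.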

Still, isn’t this an obvious waste of money?

To understand the motivation here, you must first understand (and accept) that no matter how amazing your management, purchasing, and contracting skills are, there remains a significant random element in the results of any non-trivial project. There is a range of possible outcomes: a probability distribution describing how likely the project is to be done by any given time.

RAIT is not about minimizing best-case cost. It is about maximizing the probability of timely, successful delivery:

- To reduce the risk that your project is never delivered.

- To reduce the risk that your project is delivered late.

- To build your aggregate experience faster, as you learn from multiple teams.

- To enable bolder exploration of alternative approaches to the work.
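
To put rough numbers on the delivery risk, here is a small Python sketch. It rests on assumptions that are mine, not measurements: every team has the same chance `p_single` of delivering on time, the teams succeed or fail independently, and all N teams run for the whole project (so it does not model the trimmed variant). The 0.6 figure is purely hypothetical.

```python
def p_any_delivers(p_single, n_teams):
    """Chance that at least one of n_teams delivers on time.

    Assumes each team delivers with probability p_single and that
    teams succeed or fail independently of one another.
    """
    p_all_fail = (1 - p_single) ** n_teams
    return 1 - p_all_fail


# Hypothetical: each team has a 60% chance of delivering on time.
for n in (1, 2, 3):
    print(n, round(p_any_delivers(0.6, n), 3))
```

Under these assumptions, two teams lift the chance of an on-time delivery from 0.6 to about 0.84, and three teams to about 0.94. Real teams are never fully independent (they share your spec and your mistakes), so treat this as an upper bound on the benefit rather than a forecast.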

What projects are best suited for RAIT?

Smaller projects have a lower absolute cost of duplicate efforts, so for these it is easier to consider some cost duplication. RAIT is especially well suited when hiring out work to be done “out there” by people scattered around the internet and around the world, because the risk of some of the teams/people not engaging effectively in the work is typically higher.

Very important projects justify the higher expense of RAIT. You could think of high-profile, big-dollar government technology development programs as an example of RAIT: a government will sometimes pay two firms to develop different designs of working prototype aircraft, then choose only one of them for volume production. For a smaller-scale example, consider the notion of producing an iPhone app or Flash game for an upcoming event, where missing the date means getting no value at all for your efforts.

Thanks to David McNeil for reviewing a draft of this.