Some developers love HTML: its syntax, its angle brackets, its duplicated tag names, its scent, its silky smooth skin. If you are among them, you probably won’t like this post.

I appreciate the practicality of HTML: HTML is ubiquitous, so nearly every developer already knows it. Nearly every editor and IDE already syntax-highlights and auto-formats HTML. But I don’t care for HTML’s syntax. On some projects, I use tools that offer an alternative, simpler syntax for the underlying HTML/DOM data model.

Indentation-Based HTML Alternatives

The most popular HTML alternative syntax is a Python-like indentation-based syntax, in which element nesting is determined by start-of-line whitespace. Implementations of this idea include Jade, HAML, and Slim.

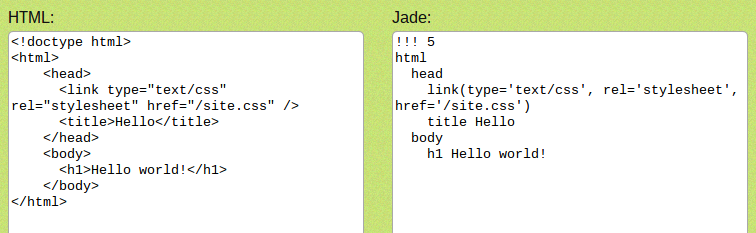

Of those, Jade seems the most polished, and there are multiple Jade implementations available: the original (JavaScript, for use in Node or the browser), Java, Scala, Python, PHP, and possibly others. Jade looks like this:

There is a free, helpful HTML to Jade converter online thanks to Aaron Powell, shown in the above image. It can be used to translate HTML documents or snippets to Jade in a couple of clicks.
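
To make the core idea concrete, here is a minimal sketch in Python of how indentation alone can drive element nesting. It is a toy, not Jade's actual grammar or parser: the `render` function, its `indent_width` parameter, and the "tag, then optional text" line format are all assumptions made for illustration. Lines that carry text are treated as leaf elements, and attributes, classes, and ids are not handled at all.

```python
import html


def render(source: str, indent_width: int = 2) -> str:
    """Convert a toy indentation-based markup into HTML.

    Each non-blank line is either `tag` or `tag some text`. Nesting depth
    comes only from the number of leading spaces. Hypothetical format,
    loosely inspired by Jade, not compatible with it.
    """
    out = []    # HTML lines produced so far
    stack = []  # (depth, tag) pairs for elements that are still open

    def close_to(depth: int) -> None:
        # Close every open element sitting at this depth or deeper.
        while stack and stack[-1][0] >= depth:
            open_depth, tag = stack.pop()
            out.append(" " * (open_depth * indent_width) + f"</{tag}>")

    for line in source.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        stripped = line.lstrip(" ")
        depth = (len(line) - len(stripped)) // indent_width
        tag, _, text = stripped.partition(" ")
        close_to(depth)
        pad = " " * (depth * indent_width)
        if text:
            # Leaf element: open and close on the same output line.
            out.append(f"{pad}<{tag}>{html.escape(text)}</{tag}>")
        else:
            # Container element: stays open until indentation drops back.
            out.append(f"{pad}<{tag}>")
            stack.append((depth, tag))

    close_to(0)  # end of input closes whatever is still open
    return "\n".join(out)


if __name__ == "__main__":
    print(render("ul\n  li One\n  li Two\np Done"))
```

Because closing tags are generated from the stack rather than typed by hand, there is simply no way for the input to leave a tag unbalanced, which is the property the advantages below lean on.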

Non-HTML HTML Advantages

- Jade (and other tools) trivially do a lot of things right, like balanced tags, with no need for IDE support.

- Generated HTML will automatically be “pretty” and consistent.

- Generated HTML will always be well formed: Jade doesn’t have a way to express unbalanced tags!

- Very concise and tidy syntax.

- Cleaner diffs for code changes – less noise in the file means less noise in the diff.

Non-HTML HTML Disadvantages

- Another Language to Learn: People already know HTML; any of these tools is a new thing to learn.

- Build Step: any of these tools needs a build step to get from the higher level language to browser-ready HTML.

- Limited development tool support – you might get syntax highlighting and auto-completion, but you will need to look around and set it up.
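
For a sense of how small the build step can be, here is a rough sketch in Python. Everything named here is hypothetical: `build` is an illustrative helper, and `compile_template` stands in for whatever compiler a project actually uses (it is passed in as a plain function so the sketch does not assume any particular tool's API). The `.jade` suffix and the "skip if the output is newer" rule are assumptions too.

```python
from pathlib import Path
from typing import Callable, List


def build(
    src_dir: Path,
    out_dir: Path,
    compile_template: Callable[[str], str],
    suffix: str = ".jade",
) -> List[Path]:
    """Compile every template under src_dir into an .html file under out_dir.

    `compile_template` is a placeholder for a real template compiler.
    Outputs that are at least as new as their source are skipped.
    Returns the list of files that were (re)written.
    """
    written = []
    for src in sorted(src_dir.rglob(f"*{suffix}")):
        # Mirror the source tree, swapping the template suffix for .html.
        dest = out_dir / src.relative_to(src_dir).with_suffix(".html")
        if dest.exists() and dest.stat().st_mtime >= src.stat().st_mtime:
            continue  # output is already up to date
        dest.parent.mkdir(parents=True, exist_ok=True)
        markup = compile_template(src.read_text(encoding="utf-8"))
        dest.write_text(markup, encoding="utf-8")
        written.append(dest)
    return written
```

In practice most projects would get this from an existing build tool or a watch task rather than writing it by hand; the point is only that the extra step is mechanical.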

Non-Arguments

- There is no “lock in” risk – any project using (for example) Jade as an alternative HTML syntax could be converted to plain HTML templates in a few hours or less.

- Since it is all HTML at runtime, there is no performance difference.

Conclusion

On balance, I think it is a minor win (nice, but not indispensable) to use a non-HTML HTML syntax. At work, we do so on many of our projects.